TESTING WHILE CODING

How to think about, plan and run tests in order to meet all quality requirements

BY JOHN JAN POPOVIC

Proof that all expected technical requirements are completely satisfied is gathered not only during final product testing but also during the early stages of development. This is the fundamental idea behind the iterative software growth stages. Testing while coding is conceptual work that is not easy to establish. The iterative growth stages must be planned, and the test cases created, each with its examination scenario, context, input parameters and desired output data, before coding starts.

At the end of each short iterative cycle we obtain failure detection diagnostics. We must then describe, design and create a refinement proposal to fix each detected failure. Finally, the code patch must be crafted, implemented and deployed.

If no further failures are detected, we move on to a new and more complicated test case, and so on, until all functionality and quality requirements are met and the software meets or exceeds the requested performance.
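The cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Case` record, the `run_until_first_failure` helper and the `safe_divide` function under test are all hypothetical names invented for this example. The cases are ordered from trivial to complicated, and the run stops at the first failure so that it can be diagnosed and patched before moving on.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional


@dataclass
class Case:
    """One test case: a name, the input arguments and the desired output."""
    name: str
    args: tuple
    expected: Any


def run_until_first_failure(func: Callable, cases: List[Case]) -> Optional[Case]:
    """Run the cases in order (simplest first); return the first failing one, or None."""
    for case in cases:
        try:
            actual = func(*case.args)
        except Exception:
            return case  # an unexpected exception counts as a failure
        if actual != case.expected:
            return case
    return None


def safe_divide(a, b):
    """Hypothetical function under test; this first draft ignores b == 0."""
    return a / b


# Test cases ordered from trivial to complicated.
cases = [
    Case("trivial", (4, 2), 2),
    Case("fraction", (1, 4), 0.25),
    Case("division by zero", (1, 0), None),
]

first_failure = run_until_first_failure(safe_divide, cases)
if first_failure is not None:
    print("Fix needed before moving on:", first_failure.name)
```

Here the first two cases pass and the third one fails, which is the signal to design a refinement (for example, returning `None` when `b` is zero), patch the code and rerun the whole sequence.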

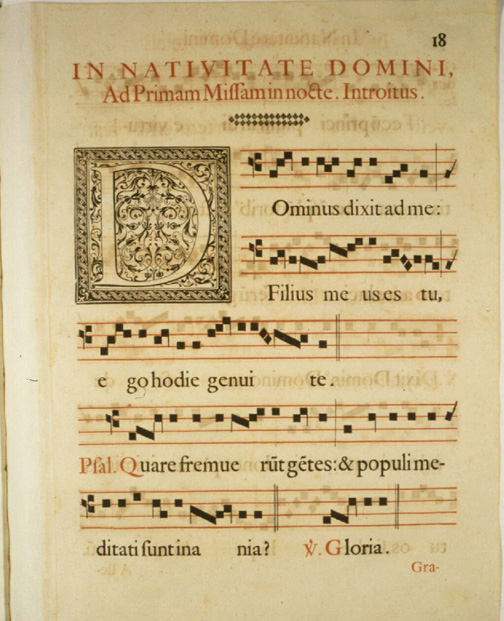

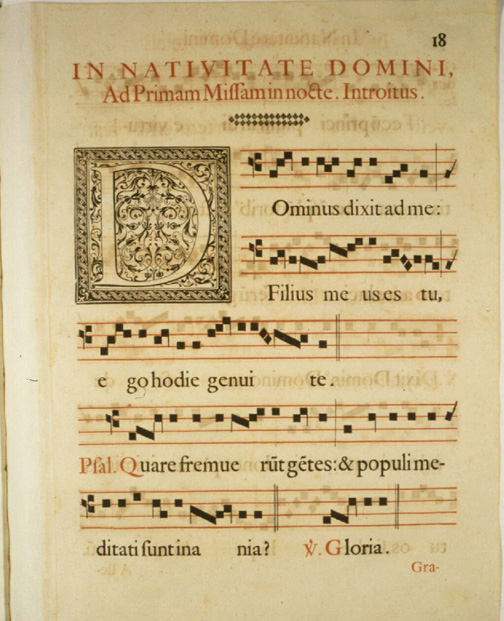

While crafting software I am inspired by the precision and excellence of ancient Swiss watchmaking craftsmen and by the devotion of medieval monks illuminating manuscripts.

Quality requirements

Quality Assurance (QA) test planning precedes code crafting, growth and refinement. We must create a test plan and populate it with test cases, from trivial to complicated, each with its desired outcome. We should also relate test cases to functional requirements, deploy the test plan, execute the test cases and mark each one as successful or failed. We must also create software that generates reports on our test cases.

During the development we should also be able to:

- specify valid test parameters

- create a sequence of test instances in a batch

- plan development MILESTONES, starting with simple but functional code

- monitor test case execution in a log file, and report success, failure, and the type of error or warning during and after the execution of the code

- add and remove particular test cases from the test batch

- do requirements tracking

- track the software growth, mutations and evolution on the roadmap milestones

- do bug reporting and solution tracking
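Several of the capabilities listed above (batching test instances, adding and removing cases, logging success, failure and error type, and relating cases to requirements) can be sketched with the Python standard library. The `TestBatch` class and the requirement identifiers such as `REQ-1` are hypothetical, introduced only for this illustration; a real project would more likely rely on an existing test framework.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("test_batch")


class TestBatch:
    """A hypothetical batch of named checks, each tied to a requirement id."""

    def __init__(self):
        self._cases = {}  # name -> (check callable, requirement id)

    def add(self, name, check, requirement):
        self._cases[name] = (check, requirement)

    def remove(self, name):
        self._cases.pop(name, None)

    def run(self):
        """Execute every case, log the outcome and return a name -> status report."""
        results = {}
        for name, (check, requirement) in self._cases.items():
            try:
                passed = bool(check())
            except Exception as exc:
                # Record the type of error as well as the message.
                log.error("%s [%s] error %s: %s", name, requirement, type(exc).__name__, exc)
                results[name] = "error"
                continue
            if passed:
                log.info("%s [%s] success", name, requirement)
                results[name] = "success"
            else:
                log.warning("%s [%s] failure", name, requirement)
                results[name] = "failure"
        return results


batch = TestBatch()
batch.add("addition", lambda: 1 + 1 == 2, "REQ-1")
batch.add("wrong sum", lambda: 1 + 1 == 3, "REQ-1")
batch.add("bad index", lambda: [][0] == 1, "REQ-2")
batch.add("obsolete", lambda: True, "REQ-3")
batch.remove("obsolete")  # cases can be taken out of the batch again
results = batch.run()
```

In this sketch the log goes to the console; passing a `filename` argument to `logging.basicConfig` would send it to a log file instead. The returned `results` dictionary is the raw material for a report on the test cases.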

The Milestones and Incremental Development process is not only a great way to map out complex functionality; it is also IMPORTANT for testing critical features before they are launched. During our INCREMENTAL DEVELOPMENT process we can change the application structure in order to better organise bug tracking, development and feature releases.

Pre-Alpha

The pre-alpha phase refers to all activities performed prior to alpha testing. These activities can include collecting real-life experimental data and real-life routine scenarios, prototyping, early detection of pre-disaster scenario symptoms, building a database of solved and unsolved problems, requirements analysis, data structure analysis, identifying valid and invalid data patterns, error detection and correction, and algorithm design.

In software development there are often several versions of pre-alpha prototypes. Milestone versions include specific sets of incremental features, which are released as soon as each feature functions correctly.

FEATURES SHOULD GROW VERTICALLY AND NOT HORIZONTALLY

Vertical vs. horizontal development philosophy. The vertical development philosophy means delivering a small set of perfectly performing features in which all quality requirements are implemented. We are committed to providing functionality that meets or exceeds promised performance.

---------------------------------------------------------------------------------------------------

PLANNING AND BIG PICTURE ORIENTATION: Where are you, and where are you going?

---------------------------------------------------------------------------------------------------

★ Programming without an overall architecture or design in mind is like exploring a cave with only a flash-light: You don't know where you've been, you don't know where you're going, and you don't know quite where you are.

- Danny Thorpe, former Chief Architect of the Delphi programming language

----------------------------------------------------------------------

FROM TRIVIAL TO IMPOSSIBLE -- FROM VAGUE TO PRECISE

----------------------------------------------------------------------

Proving that a theoretical model fulfils the formal practical requirements is done in phases.

★ PHASE 1: Real life experiments, reasoning and formal requirements, input - black box - output modelling, algorithm prototype simulation

★ PHASE 2: Construction of the ideal test-case database; refining the code and the prototype until the simplest, most trivial test case is performed correctly.

★ PHASE 3: Try the alpha prototype code with gradually more complicated test-case data, refining the code until all test cases are executed correctly and the simulation provides the desired and anticipated results.

It is important NOT to add new features while core features are unstable; doing so is useless. Do NOT add new features if core features are still buggy!

★ PHASE 4: The beta code is tested on real-life data. We monitor and check whether the code executes correctly, and during the process we try to capture all exceptions, discrepancies, anomalies, alerts, reports and crash results in order to construct a test-case database of reproducible anomalies and crash situations.

★ PHASE 5: Induced crash testing before the 1.0 release. We are committed to providing functionality and service that meet or exceed promised performance. Testing is performed on real-life data mixed with some random garbage input to prove such functionality under induced stress conditions.
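The idea behind Phases 4 and 5, mixing real-life data with random garbage input and recording every uncontrolled crash in a reproducible form, might look like the following minimal sketch. The `parse_age` function under test and the `stress_test` helper are hypothetical names invented for this example, and the choice to treat `ValueError` as a controlled rejection is an assumption of the sketch, not a general rule.

```python
import random


def parse_age(text):
    """Hypothetical function under test: parse an age in years, from 0 to 150."""
    value = int(text.strip())
    if not 0 <= value <= 150:
        raise ValueError(f"age out of range: {value}")
    return value


def stress_test(func, valid_inputs, rounds=1000, seed=0):
    """Feed valid inputs mixed with random garbage; collect unexpected crashes."""
    rng = random.Random(seed)  # a fixed seed keeps every anomaly reproducible
    crashes = []
    for _ in range(rounds):
        if rng.random() < 0.5:
            text = rng.choice(valid_inputs)  # real-life style data
        else:
            length = rng.randint(0, 8)
            text = "".join(chr(rng.randint(0, 255)) for _ in range(length))
        try:
            func(text)
        except ValueError:
            pass  # a controlled rejection of bad input is acceptable
        except Exception as exc:
            # Anything else is an uncontrolled crash: keep the input for the database.
            crashes.append((text, repr(exc)))
    return crashes


crashes = stress_test(parse_age, ["0", "42", " 150 "])
print(f"{len(crashes)} uncontrolled crashes recorded")
```

Each entry in `crashes` pairs the offending input with the exception it caused, so it can be stored as a reproducible test case. An empty list only means that no uncontrolled crash was observed in this run, not that none is possible.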

-----------------------------

QUALITY REQUIREMENTS

-----------------------------

Whatever the approach to software development may be, the final software must satisfy some fundamental quality requirements. The following software properties are among the most relevant:

* Efficiency/performance: the amount of system resources a program consumes (processor time, memory space, slow devices such as disks, network bandwidth and to some extent even user interaction): the less, the better. This also includes correct disposal of some resources, such as cleaning up temporary files and avoiding memory leaks.

* Reliability: how often the results of a program are correct. This depends on conceptual correctness of algorithms, and minimization of programming mistakes, such as mistakes in resource management (e.g., buffer overflows and race conditions) and logic errors (such as division by zero or off-by-one errors).

* Robustness: how well the software reacts to problems that are not caused by programmer error. This includes situations such as incorrect, inappropriate or corrupt data, unavailability of needed resources such as memory, operating system services and network connections, and user error.

* Usability: the ergonomics of a program: the ease with which a person can use the program for its intended purpose, or in some cases even unanticipated purposes. Such issues can make or break its success even regardless of other issues. This involves a wide range of textual, graphical and sometimes hardware elements that improve the clarity, intuitiveness, cohesiveness and completeness of a program's user interface.

* Portability: the range of computer hardware and operating system platforms on which the source code of a program can be compiled/interpreted and run. This depends on differences in the programming facilities provided by the different platforms, including hardware and operating system resources, expected behaviour of the hardware and operating system, and availability of platform-specific compilers (and sometimes libraries) for the language of the source code.

* Maintainability: the ease with which a program can be modified by its present or future developers in order to make improvements or customizations, fix bugs and security holes, or adapt it to new environments. Good practices during initial development make the difference in this regard. This quality may not be directly apparent to the end user but it can significantly affect the fate of a program over the long term.

=======================

TestDrivenDevelopment

=======================

Martin Fowler

5 March 2005

Test Driven Development (TDD) is a design technique that drives the development process through testing. In essence you follow three simple steps repeatedly:

1. Write a test for the next bit of functionality you want to add.

2. Write the functional code until the test passes.

3. Refactor both new and old code to make it well structured.

You continue cycling through these three steps, one test at a time, building up the functionality of the system. Writing the test first, what XPE2 calls Test First Programming, provides two main benefits. Most obviously it's a way to get SelfTestingCode, since you can only write some functional code in response to making a test pass. The second benefit is that thinking about the test first forces you to think about the interface to the code first. This focus on interface and how you use a class helps you separate interface from implementation.

The most common way that I hear to screw up TDD is neglecting the third step. Refactoring the code to keep it clean is a key part of the process, otherwise you just end up with a messy aggregation of code fragments. (At least these will have tests, so it's a less painful result than most failures of design.)

The term "Test-Driven Development" was coined by Kent Beck, who popularized the technique as part of Extreme Programming in the late 1990s.
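As an illustration that is not part of Fowler's text, the three-step cycle described above can be sketched with Python's standard `unittest` module. The `slugify` function and its expected behaviour are hypothetical, chosen only for this example. In step 1 the two tests were written first and failed; in step 2 `slugify` was written until they passed; in step 3 the body was refactored into a single expression while the tests stayed green.

```python
import unittest


def slugify(title):
    """Hypothetical functional code, written only after the tests below existed."""
    # Step 3 result: an earlier loop-based draft was refactored to this one-liner.
    return "-".join(title.lower().split())


class SlugifyTest(unittest.TestCase):
    # Step 1: each test was written before the behaviour it checks existed.
    def test_lowercases_and_joins_words(self):
        self.assertEqual(slugify("Testing While Coding"), "testing-while-coding")

    def test_collapses_extra_whitespace(self):
        self.assertEqual(slugify("  Testing   While  "), "testing-while")


# Step 2: run the tests; the functional code is done when they all pass.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Because the tests were written against the call `slugify(title)` before any implementation existed, the interface was decided first, which is the second benefit the excerpt mentions.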

---------------------------------------------------------------------------

http://watirmelon.com/2014/02/25/answer-will-it-work-over-does-it-work/

Software teams must continually answer two key questions to ensure they deliver a quality product:

Are we building the correct thing?

Are we building the thing correctly?

In recent times, I’ve noticed a seismic shift of a tester’s role on an agile software team from testing that the team is building the thing correctly to helping the team build the correct thing. That thing can be a user story, a product or even an entire company.

As Trish Khoo recently wrote:

“The more effort I put into testing the product conceptually at the start of the process, the less effort I had to put into manually testing the product at the end”

It’s more valuable for a tester to answer ‘will it work?‘ at the start than ‘does it work?‘ at the end. This is because if you can determine something isn’t the correct something before development is started, you’ll save the development, testing and rework needed to actually build what is needed (or not needed).

==================================================

Get more out of your legacy systems: more performance, functionality, reliability, and manageability

Is your code easy to change? Can you get nearly instantaneous feedback when you do change it? Do you understand it? If the answer to any of these questions is no, you have legacy code, and it is draining time and money away from your development efforts.

In this book, Michael Feathers offers start-to-finish strategies for working more effectively with large, untested legacy code bases. This book draws on material Michael created for his renowned Object Mentor seminars: techniques Michael has used in mentoring to help hundreds of developers, technical managers, and testers bring their legacy systems under control.

The topics covered include

- Understanding the mechanics of software change: adding features, fixing bugs, improving design, optimizing performance

- Getting legacy code into a test harness

- Writing tests that protect you against introducing new problems

- Techniques that can be used with any language or platform

- Accurately identifying where code changes need to be made

- Coping with legacy systems that aren't object-oriented

- Handling applications that don't seem to have any structure

This book also includes a catalog of twenty-four dependency-breaking techniques that help you work with program elements in isolation and make safer changes.

http://www.amazon.com/dp/B005OYHF0A/ref=cm_sw_su_dp
